Create Optimal Hotel Recommendations

Date: 28 Jan 2021

Tag(s): Jupyter Notebook, Python, Pandas, Profiling, Hotel, Expedia, Models, PCA, Hyperparameter

Categories: Machine Learning, ML, Classification

Download Project: 7.57 MB - Zip Archive

Overview

The dataset and problem were originally part of a Kaggle competition set forth by Expedia to improve their hotel recommendation algorithm. I did not take part in the competition, as I didn't know about it at the time, but it still seemed like an interesting problem to tackle. My notebook approaches the problem by creating several classification models, fine-tuning their hyperparameters, and comparing their results to see which one performed best on the data.

This notebook explores several classification models, including Random Forest and Decision Tree, for selecting the best hotel recommendations for Expedia users.
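A minimal sketch of the model-comparison step, assuming scikit-learn and a synthetic five-class dataset standing in for the Expedia data (the class count and sizes are arbitrary). It scores a Decision Tree and a Random Forest with 5-fold cross-validated accuracy and picks the higher mean; it illustrates the idea and is not the notebook's own code.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic multiclass stand-in for the hotel data (illustrative sizes only).
X, y = make_classification(
    n_samples=2000, n_features=10, n_informative=6,
    n_classes=5, random_state=0,
)

models = {
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    # Mean 5-fold cross-validated accuracy for each candidate model.
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = fold_scores.mean()
    print(f"{name}: mean accuracy = {scores[name]:.3f}")

# The model with the highest mean accuracy wins the comparison.
best_name = max(scores, key=scores.get)
print("Best model:", best_name)
```

Cross-validation is used rather than a single split so that each model's score is averaged over several train/test partitions.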

View Jupyter Notebook

The notebook goes step by step through the project; please follow the directions and cell order if you would like to replicate the results.

- Click the "View Notebook" button to open the rendered notebook in a new tab

- Click the "GitHub" button to view the project in the GitHub portfolio repo

Methodology

I used Pandas Profiling to profile the dataset and get an understanding of what I was working with. Because of the large number of columns, PCA (Principal Component Analysis) feature reduction was needed to vastly reduce the column count so that the modeling could be completed in a reasonable amount of time.
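A minimal sketch of the column-reduction step, assuming the competition's layout in which `destinations.csv` holds 149 latent columns `d1` to `d149` keyed by `srch_destination_id`. The data below is random and the sizes are hypothetical; the sketch compresses the 149 columns into 10 principal components and joins only those onto the booking rows.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Hypothetical stand-in for destinations.csv: one row per destination id,
# 149 latent columns d1..d149 filled with random values.
n_dest = 300
latent_cols = [f"d{i}" for i in range(1, 150)]
destinations = pd.DataFrame(rng.normal(size=(n_dest, 149)), columns=latent_cols)
destinations.insert(0, "srch_destination_id", np.arange(n_dest))

# Hypothetical booking rows that reference destinations by id.
train = pd.DataFrame({
    "srch_destination_id": rng.integers(0, n_dest, size=1000),
    "hotel_cluster": rng.integers(0, 100, size=1000),
})

# Project the 149 latent columns onto the first 10 principal components.
pca = PCA(n_components=10, random_state=0)
components = pca.fit_transform(destinations[latent_cols])

dest_small = pd.DataFrame(components, columns=[f"pc{i}" for i in range(1, 11)])
dest_small["srch_destination_id"] = destinations["srch_destination_id"].values

# Join the 10 compact features onto the booking rows instead of all 149.
train_small = train.merge(dest_small, on="srch_destination_id", how="left")
print(train_small.shape)  # (1000, 12)
```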

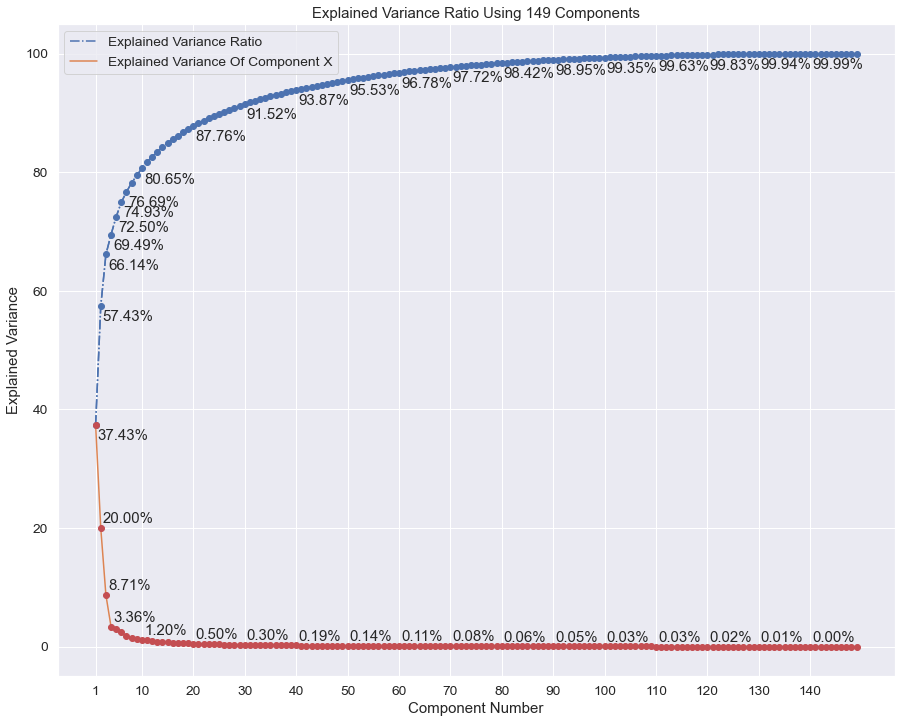

PCA - Explained Variance

The graph below shows that, of the 149 total components, the first 10 alone account for almost 81% of the variance in the destination column data. Using only those 10 components to represent the destination data therefore greatly reduces processing and modeling time.

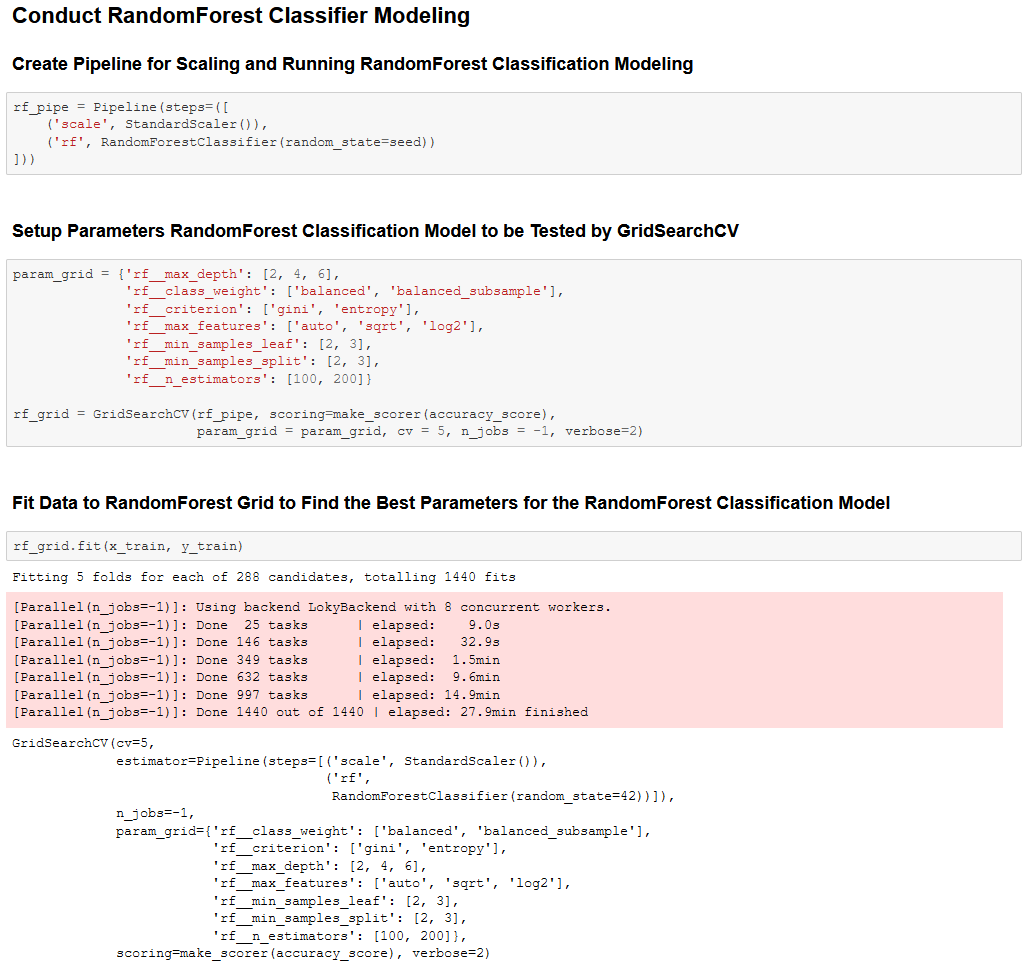
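A cumulative explained-variance curve like the one above can be computed with scikit-learn's `PCA.explained_variance_ratio_`. The sketch below is an illustration, not the notebook's code: the helper `n_components_for` is a hypothetical name, and the input is synthetic data with a built-in rank-10 structure across 149 columns. It returns the smallest number of components whose cumulative ratio reaches a target such as 0.80.

```python
import numpy as np
from sklearn.decomposition import PCA


def n_components_for(X, target=0.80):
    """Smallest number of PCA components whose cumulative explained
    variance ratio reaches `target` (hypothetical helper)."""
    pca = PCA().fit(X)
    cumulative = np.cumsum(pca.explained_variance_ratio_)
    # searchsorted finds the first index where cumulative >= target;
    # +1 turns that index into a component count.
    k = int(np.searchsorted(cumulative, target)) + 1
    return min(k, len(cumulative)), cumulative


# Synthetic data: 149 columns driven by 10 hidden factors plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 10))
weights = rng.normal(size=(10, 149))
X = latent @ weights + 0.1 * rng.normal(size=(500, 149))

k, cumulative = n_components_for(X, target=0.80)
print(f"{k} components explain {cumulative[k - 1]:.1%} of the variance")
```

Plotting `cumulative` against the component index reproduces the shape of an explained-variance chart.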

Tuning Model Hyperparameters

The Python library scikit-learn (sklearn) provides a tool called GridSearchCV that trains your model with every combination from a grid of candidate hyperparameters and cross-validates each one to find the best settings. It makes fine-tuning models and finding the best possible hyperparameters much easier.
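A minimal sketch of how GridSearchCV explores a grid, assuming a synthetic three-class dataset and a deliberately small, hypothetical parameter grid (the notebook's actual grid is shown in the excerpt below). Every combination is fit and cross-validated, so 2 × 2 × 2 = 8 candidates with 3 folds means 24 fits.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data (illustrative sizes only).
X, y = make_classification(n_samples=600, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)

# Hypothetical grid: each key is a RandomForestClassifier parameter,
# each list holds the candidate values to try.
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [3, 6],
    "n_estimators": [50, 100],
}

search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=3,                 # 3-fold cross-validation per candidate
    scoring="accuracy",
)
search.fit(X, y)

print("Best parameters:", search.best_params_)
print("Best CV accuracy:", round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` holds a model refit on all the data with the winning parameters.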

GridSearchCV Example

Below is an excerpt from this project's notebook showing what GridSearchCV looks like in practice.

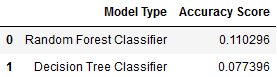

Results - Random Forest

The best model ended up being a Random Forest Classifier with an accuracy score of ~0.11, using the following parameters:

Best Random Forest Classifier Parameters:

- class_weight: balanced
- criterion: entropy
- max_depth: 6
- max_features: sqrt
- min_samples_leaf: 3
- min_samples_split: 3
- n_estimators: 200
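The parameters listed above map directly onto scikit-learn's `RandomForestClassifier` constructor. A minimal sketch, assuming synthetic five-class data in place of the Expedia set (so the accuracy it prints says nothing about the project's results):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Best parameters as reported above.
best_params = {
    "class_weight": "balanced",
    "criterion": "entropy",
    "max_depth": 6,
    "max_features": "sqrt",
    "min_samples_leaf": 3,
    "min_samples_split": 3,
    "n_estimators": 200,
}

model = RandomForestClassifier(random_state=0, **best_params)

# Synthetic stand-in data with a stratified 75/25 train/test split.
X, y = make_classification(n_samples=1000, n_features=10, n_informative=6,
                           n_classes=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y)

model.fit(X_train, y_train)
acc = accuracy_score(y_test, model.predict(X_test))
print(f"Held-out accuracy: {acc:.3f}")
```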

After all of my analysis, my modeling results were unfortunately not very good: I ended up with a final accuracy score of 0.2666 after training on the entire dataset. The score would most likely improve with a larger sample size, a differently tuned model, and a more powerful computer to train it on. I had originally decided to use XGBoost, but model training took far longer than was feasible for me (even with the small sample size), so I was forced to switch to a different model.